This is the first in a series of posts about the design and implementation of a search engine for Boards.ie.

Boards.ie recently launched a new search engine – http://www.boards.ie/search/ – which is built upon Amazon Web Services using Solr with PHP as the glue.

Currently, Boards.ie users are searching nearly 30 million posts almost a million times a month.

A little background

Solr has been used in production by Distilled Media (formerly the Daft Media Group) for a couple of years now, first being tested on LetsRent.ie, then for powering the maps functionality on Daft.ie, and even more recently as the core technology for the relaunch of Adverts.ie.

Boards.ie’s usage has been an interesting look into the future for Daft.ie and Adverts.ie. With nearly 26 million posts (“documents” in Solr nomenclature), growing by more than a million every month, it presents a challenge in providing a reliable, affordable, relevant and fast search solution to our users.

Boards.ie has relied on MySQL’s “Fulltext” search as long as it has been using MySQL (forever). As time has passed, more and more restrictions had to be placed on search in order to keep it online, and the sheer volume of data was making results less and less useful to people well used to using Google search.

There have been a number of challenges to solve along the way:

Can we make search faster and more relevant with what we have already?

This is sort of an obvious question, but we didn’t want to jump the gun and leap into an entirely new technology stack if it were possible for us to overhaul what was currently there.

The answer was not particularly straightforward, but any solution that kept the MySQL fulltext engine as our primary search system involved a lot of new beefy hardware and a lot of the same slowness, while punting relevance issues down the line. As a result, we decided that it was not a realistic option.

Which search technology should we use?

There were only a couple of choices available to us when we started working on this project. First, we wanted to use Open Source – there are plenty of good reasons to choose either open source or proprietary software depending on your requirements, and we have experience with both. We wanted the flexibility to hack away at what we were using and share back to the community where possible. We wanted to be able to scale horizontally without worrying about skyrocketing licensing costs. We wanted access to the huge amount of expertise and goodwill that comes with open source projects, as well as experimental work done by other developers.

It also had to be compatible with the rest of our infrastructure and fit with our areas of experience – we’re pretty evenly split between FreeBSD and Linux on the infrastructure side; we didn’t want to introduce an entirely new stack (Microsoft) if it could be avoided.

As a result, there were two major options for us: SphinxSearch and Apache Solr. SphinxSearch appeared to have successful deployments with vBulletin (our forum software), but all the experience in the Distilled Group was with Solr. Daft.ie had already deployed successful Solr installations, so we decided to go with what we had some experience with.

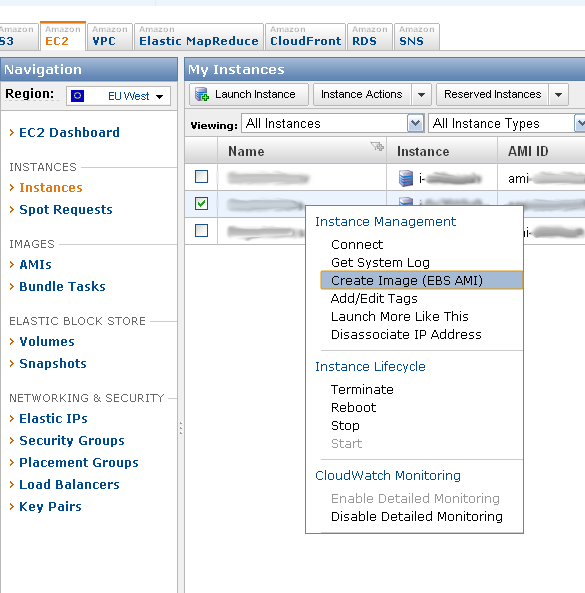

What infrastructure should we use?

We had the option of building using our own hardware, or making the leap to introducing cloud infrastructure. Moving partially into the cloud introduced a data transportation issue – how were we going to get data into the cloud while keeping our core infrastructure in Digiweb? Latency and data transfer costs were likely to be show stoppers. Amazon had recently introduced a new service in beta, Simple Notification Service (SNS). We were pretty sure we could combine this with their Simple Queue Service (SQS) to create a scalable message routing and queuing system for posts. With a few hiccups and some conversation with Amazon along the way, we were successful. As it turns out, getting the data out of our core infrastructure and into Amazon is the cheapest component of the entire operation. For the tens of thousands of messages we send to Amazon on a daily basis, we’re billed a couple of dollars a month.
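To make the shape of that pipeline concrete, here is a minimal in-memory sketch of the fan-out pattern: a topic copies every published post to each subscribed queue, and an indexer drains its queue at its own pace. The `Topic`, `Queue` and `drain` names are hypothetical stand-ins written for this illustration; they are not the AWS API and not our production code.

```python
import json
from collections import deque


class Queue:
    """Stand-in for an SQS queue: holds messages until a consumer takes them."""

    def __init__(self):
        self._messages = deque()

    def send(self, body):
        self._messages.append(body)

    def receive(self, max_messages=10):
        # Hand back up to max_messages, oldest first.
        batch = []
        while self._messages and len(batch) < max_messages:
            batch.append(self._messages.popleft())
        return batch


class Topic:
    """Stand-in for an SNS topic: copies each message to every subscriber."""

    def __init__(self):
        self._subscribers = []

    def subscribe(self, queue):
        self._subscribers.append(queue)

    def publish(self, message):
        body = json.dumps(message)
        for queue in self._subscribers:
            queue.send(body)


def drain(queue, index):
    """Consume queued posts and upsert them into a dict acting as the index."""
    count = 0
    while True:
        batch = queue.receive()
        if not batch:
            return count
        for body in batch:
            post = json.loads(body)
            index[post["id"]] = post  # a later edit overwrites the earlier copy
            count += 1


posts_topic = Topic()
indexer_queue = Queue()
posts_topic.subscribe(indexer_queue)

# The web servers publish new posts and edits; the indexer catches up later.
posts_topic.publish({"id": 1, "text": "first post"})
posts_topic.publish({"id": 1, "text": "first post (edited)"})
posts_topic.publish({"id": 2, "text": "second post"})

search_index = {}
processed = drain(indexer_queue, search_index)
print(processed, sorted(search_index))  # 3 [1, 2]
```

The point of the split is that the publisher never waits on the indexer: the web server hands the post to the topic and moves on, and the queue absorbs any backlog.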

What are the tradeoffs?

There are always tradeoffs.

The advantages and disadvantages of SQL and NoSQL solutions have been covered at length, so I’ll just highlight the areas that were significant for us.

With MySQL, our data was/is potentially consistent. For our usage, it is consistent enough for us to call it the canonical source of our data. Occasionally it chews up a post or thread, but rarely one that cannot be rewritten.

The information is also effectively instantly available upon submission. There’s occasionally a lag of up to a couple of seconds as the data waits to propagate across our MySQL cluster due to an extended lock of some sort, but it’s generally not particularly noticeable to the average user.

With the new search system we have sacrificed some consistency and how immediately data is made available to the searcher. For instance, posts sometimes (more frequently than with MySQL) do not make it into the search system. There is multiple redundancy built into the system to limit this, but occasionally a full resynchronization is required. We judged this to be an acceptable cost.
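One way to spot posts that never reached the index, short of a full resynchronization, is to compare the IDs in the canonical store with the IDs the index knows about and re-queue the difference. The sketch below assumes both sides can list their post IDs; the function names and the sample IDs are made up for illustration.

```python
def find_missing(canonical_ids, indexed_ids):
    """Return IDs in the canonical store that are absent from the index."""
    return sorted(set(canonical_ids) - set(indexed_ids))


def batches(ids, size):
    """Split a list of IDs into fixed-size batches for re-queuing."""
    return [ids[i:i + size] for i in range(0, len(ids), size)]


mysql_ids = range(1, 11)               # hypothetical post IDs in the database
solr_ids = [1, 2, 3, 5, 6, 8, 9, 10]   # hypothetical IDs present in the index

missing = find_missing(mysql_ids, solr_ids)
print(missing)              # [4, 7]
print(batches(missing, 1))  # [[4], [7]]
```

At tens of millions of documents the comparison would have to run over ID ranges rather than the whole set at once, but the idea is the same.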

Immediacy has been sacrificed such that it usually takes about 2-5 minutes for a new post or update to an old one to become available in searches, occasionally an hour, infrequently a day, and very infrequently a couple of months (relating back to consistency). We determined that the normal search profile does not require the absolute newest data, only the most relevant.

At the time we made these decisions, losing real-time search was the one I was least happy about, but for most users it does not appear to be a concern. In hindsight it’s almost obvious why: Boards.ie is a gigantic repository of historical information. The value is in being able to search this rich back catalogue of conversation, opinion and information, not just the most recent.

Even at that, recency is only sacrificed when measured in seconds.

Architectural choice

As useful as Solr has been for us, it’s a bit of a black box in our architecture. Trying to run Solr through a debugger remotely is a gigantic pain in the arse and not something I had much success with. Fortunately, the Jetty error logs are enough to illuminate most problems. That still leaves a few gripes:

- Memory management. It’s perhaps unfair to take issue with Solr over this – after all, it’s a far cry from MySQL’s famously labyrinthine memory usage configuration options, and Solr’s problems are really the restrictions of the JVM – but it feels like a single purpose machine with one major application should be able to figure out how much RAM to dedicate to disk cache and how much the application should get for optimal performance.

- XML everywhere. It’s inescapable, and again, this can be ascribed to Java culture, but damn.

- Fixed schema. I suppose this is an ideological argument, but during development this was tedious.

- The book is two inches thick and you need to know it. If you’re building a search service that you expect to take a lot of traffic and contain a lot of documents (why else would you be interested, right?) you will simply have to know all (or at least a good chunk of) the features, quirks, optimizations and architectural choices.

Solr is now a pretty well field-tested application in Distilled, and I’m pretty sure I could rapidly prototype an installation and have it up and running in production inside a week for another site, but I would prefer to investigate ElasticSearch the next time I am revisiting search options.

Further development

During the course of development, I wrote a small node.js server to speed up the relay of post data from our web servers to Amazon SNS. Due to the limitations of deploying node.js on our FreeBSD architecture at the time, this system is, unfortunately, yet to be implemented. Once it is, write operations on the site should speed up noticeably for end users, and once the bugs are ironed out it will be released as an open source project on GitHub.

A side effect of this is that I contributed some code to the AWS Library node.js project, something I would like to continue doing.

I have also amended chunks of the Solr PHP client with a sharded Solr deployment in mind, but it’s quite clunky and I would prefer to have another stab at it, maybe writing my own Solr PHP client and making it available.
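For readers unfamiliar with what a shard-aware client has to do, here is a rough sketch of the two halves: route each document to a shard by a stable hash of its ID when indexing, and pass Solr’s `shards` parameter when querying so one node fans the search out to the rest. The host names are hypothetical, the hash-modulo routing is an assumption for illustration, and none of this is the PHP client code mentioned above.

```python
import zlib
from urllib.parse import urlencode

# Hypothetical shard hosts; not taken from the actual deployment.
SHARDS = [
    "solr1.example.com:8983/solr",
    "solr2.example.com:8983/solr",
    "solr3.example.com:8983/solr",
]


def shard_for(doc_id, shards=SHARDS):
    """Pick the shard that owns a document using a stable hash of its ID."""
    return shards[zlib.crc32(str(doc_id).encode("utf-8")) % len(shards)]


def distributed_query_url(query, shards=SHARDS, rows=10):
    """Build a select URL that asks the first node to query every shard."""
    params = urlencode({"q": query, "rows": rows, "shards": ",".join(shards)})
    return "http://" + shards[0] + "/select?" + params


# The same ID always lands on the same shard, so updates overwrite in place.
print(shard_for(12345) == shard_for(12345))  # True
print(distributed_query_url("text:galway"))
```

Hash-modulo routing is the simplest option but reshuffles most documents if the number of shards changes, which is one of the reasons this part of a client gets clunky quickly.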

In the next posts on this topic, I plan to dive more deeply into the specifics of our implementation, and hopefully release some code in tandem.